At the end of my first semester teaching an undergraduate class of about 200 students in Spring 2012, I had two full boxes of uncollected homework assignments on the floor of my office. During the semester, students submitted handwritten solutions to homework problems. Teaching assistants graded them over the course of one week, and the graded work was returned to students with extensive feedback a week after the deadline. Unfortunately, about 1/3 of the students never collected their graded homework, and hence never received any useful feedback about their understanding of the course material. In the following semester, I decided to start using existing online homework systems, experimenting with McGraw-Hill Connect and Pearson MasteringEngineering. Unfortunately, the available questions were not always well suited to my teaching style, and I didn't have the flexibility to set partial credit or choose the type of feedback provided.

In Fall 2015, I started creating my own homework assignments using PrairieLearn, an online problem-driven learning system that allows me to author problem generators, which create randomly parameterized problem instances. Problem generators are typically written to create problem instances with different numeric values or other changes, so that the correct answer differs from one instance to the next.
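To make the idea of a problem generator concrete, here is a minimal sketch in Python. It is loosely modeled on PrairieLearn's convention of a `generate(data)` function that fills in `data["params"]` and `data["correct_answers"]`, but it is not PrairieLearn's actual question format: the extra `rng` argument, the stress problem, its parameter ranges, and the `new_instance` helper are all hypothetical, chosen only to show how randomized inputs lead to a different correct answer for each instance.

```python
import random


def generate(data, rng):
    """Hypothetical problem generator: fill in randomized parameters and the
    matching correct answer for one problem instance."""
    # Randomize the inputs so that each instance has a different answer.
    force = rng.randint(10, 50)         # N (hypothetical range)
    area = rng.choice([2, 4, 5, 10])    # m^2 (hypothetical choices)
    data["params"]["force"] = force
    data["params"]["area"] = area
    # The correct answer is computed from the same randomized parameters.
    data["correct_answers"]["stress"] = force / area
    return data


def new_instance(seed):
    """Create one problem instance; the seed identifies which variant it is."""
    data = {"params": {}, "correct_answers": {}}
    return generate(data, random.Random(seed))


# Different seeds usually give different numbers (and answers).
print(new_instance(1))
print(new_instance(2))
```

Seeding the random generator per instance means a given instance can be regenerated exactly, which matters when a student revisits a question they have already been graded on.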

Homework and exams assigned in PrairieLearn consist of a set of problem generators. The grading method and retry options differ between these two types of assessment, and are summarized below:

Homework assignments:

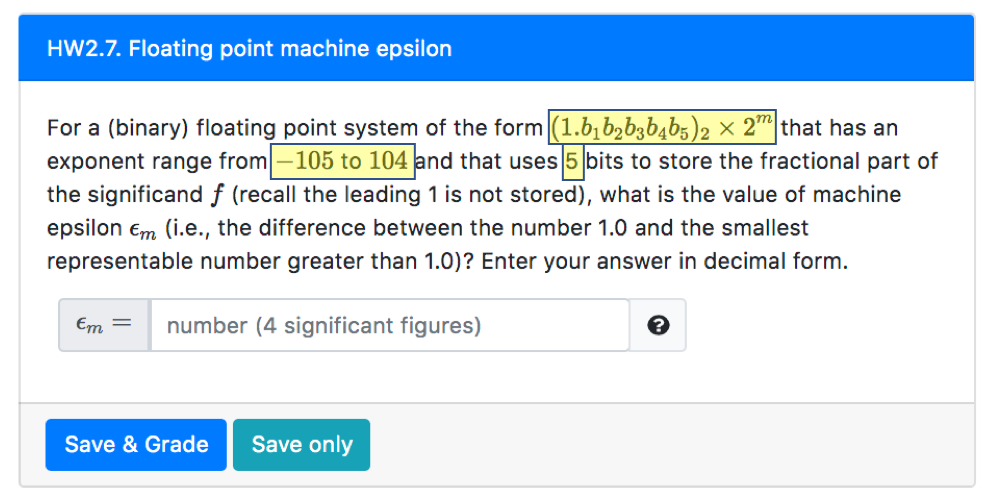

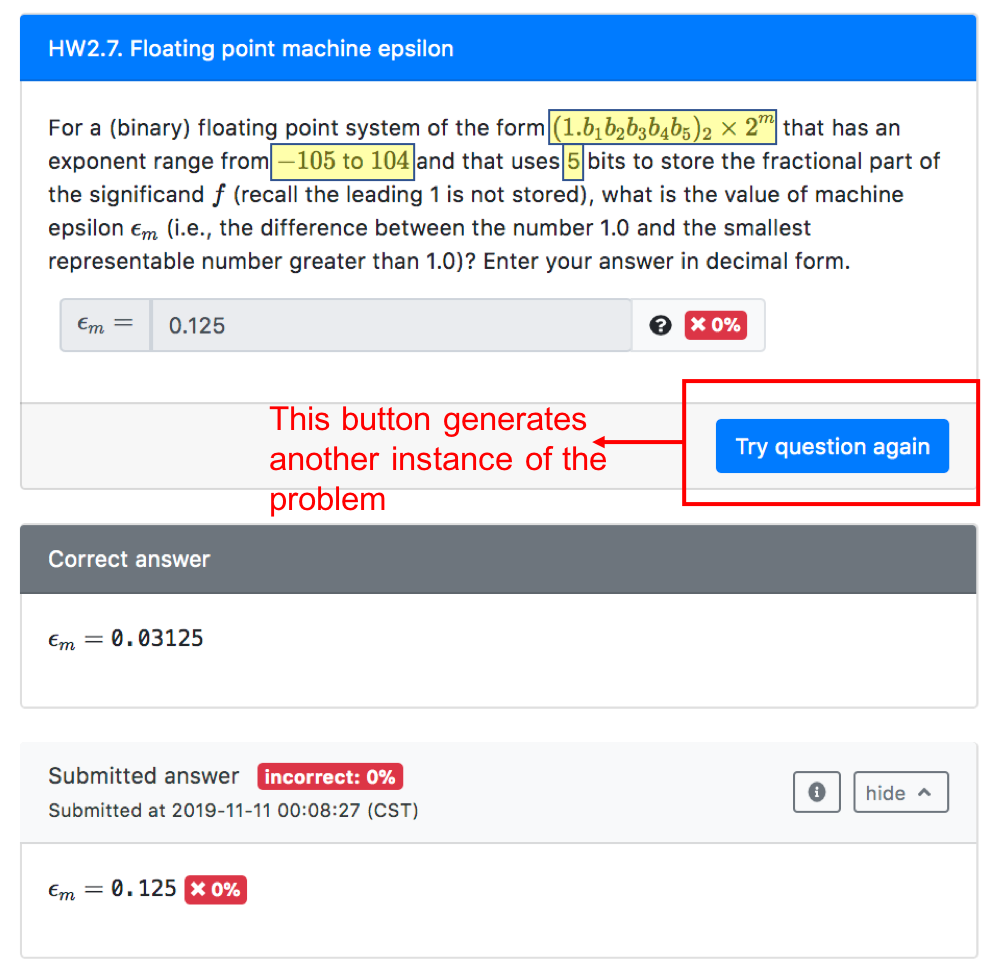

A typical problem generator has randomized parameters, so that each problem instance it creates is different. Figure 1 illustrates a question from a problem generator in which the highlighted variables are parameterized.

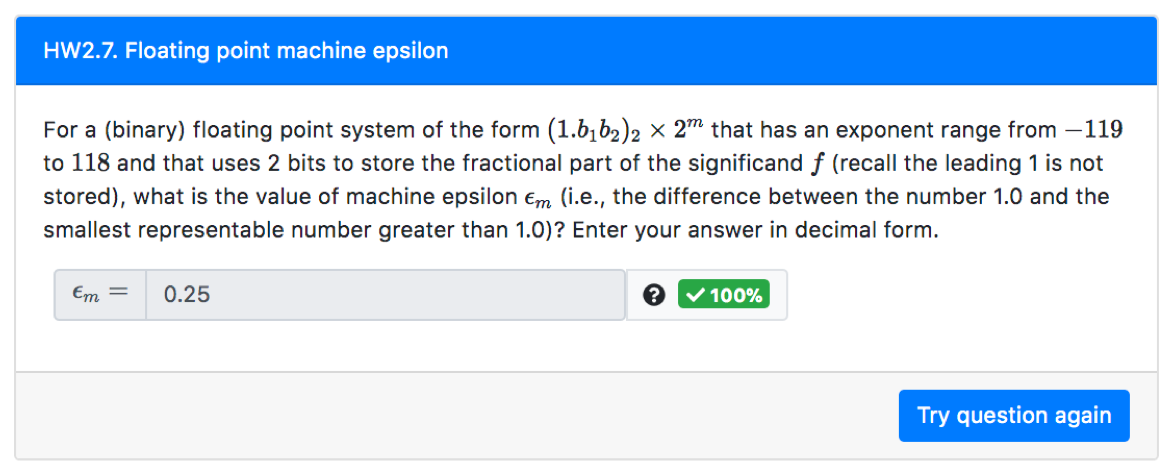

In a homework assignment, if a student's answer is marked incorrect (Fig. 2), the correct answer is displayed and the student cannot submit another answer for the same problem instance. Instead, the student can attempt a new instance by clicking "Try question again", as illustrated in Fig. 3.

Students can still generate other instances after the question is marked correct, as shown in Fig. 3. To encourage students to practice with more than one version of the question, I often give points for answering the question correctly more than once. If the question is not fully randomized (as is the case with some multiple-choice questions), I don't show the correct answer after each attempt, and students receive credit for a correct answer only once.
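The homework policy described above can be summarized as a small state machine. The sketch below is a hypothetical model, not PrairieLearn's implementation: the class name, the one-point-per-correct-instance value, and the cap of three credited instances (`max_correct`) are illustrative assumptions. It captures the rules as stated: one submission per instance, the correct answer revealed only for fully randomized questions, a fresh instance via "Try question again", and credit for repeated correct answers only when the question is fully randomized.

```python
from dataclasses import dataclass


@dataclass
class HomeworkQuestion:
    """Hypothetical model of the homework grading/retry policy."""
    points_per_correct: float = 1.0
    max_correct: int = 3          # assumption: credit for up to 3 correct instances
    fully_randomized: bool = True
    instance: int = 0             # index of the current problem instance
    locked: bool = False          # True once the current instance has been graded
    correct_count: int = 0

    def submit(self, is_correct: bool) -> bool:
        """Grade the single allowed submission for the current instance.
        Returns True if the correct answer is displayed afterwards."""
        if self.locked:
            raise RuntimeError("instance already graded; try a new instance")
        self.locked = True
        if is_correct:
            self.correct_count += 1
        # Revealing the answer is only safe when the next instance differs.
        return self.fully_randomized

    def try_again(self) -> None:
        """'Try question again': move on to a fresh problem instance."""
        self.instance += 1
        self.locked = False

    @property
    def points(self) -> float:
        # Non-randomized questions earn credit for correctness only once.
        limit = self.max_correct if self.fully_randomized else 1
        return self.points_per_correct * min(self.correct_count, limit)


q = HomeworkQuestion()
q.submit(False)      # wrong: answer shown, instance locked
q.try_again()
q.submit(True)       # right on a new instance
print(q.points)      # 1.0
```

Capping the credited instances keeps the incentive to practice a few variants without letting a single question dominate the assignment total.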

Exams and/or short quizzes:

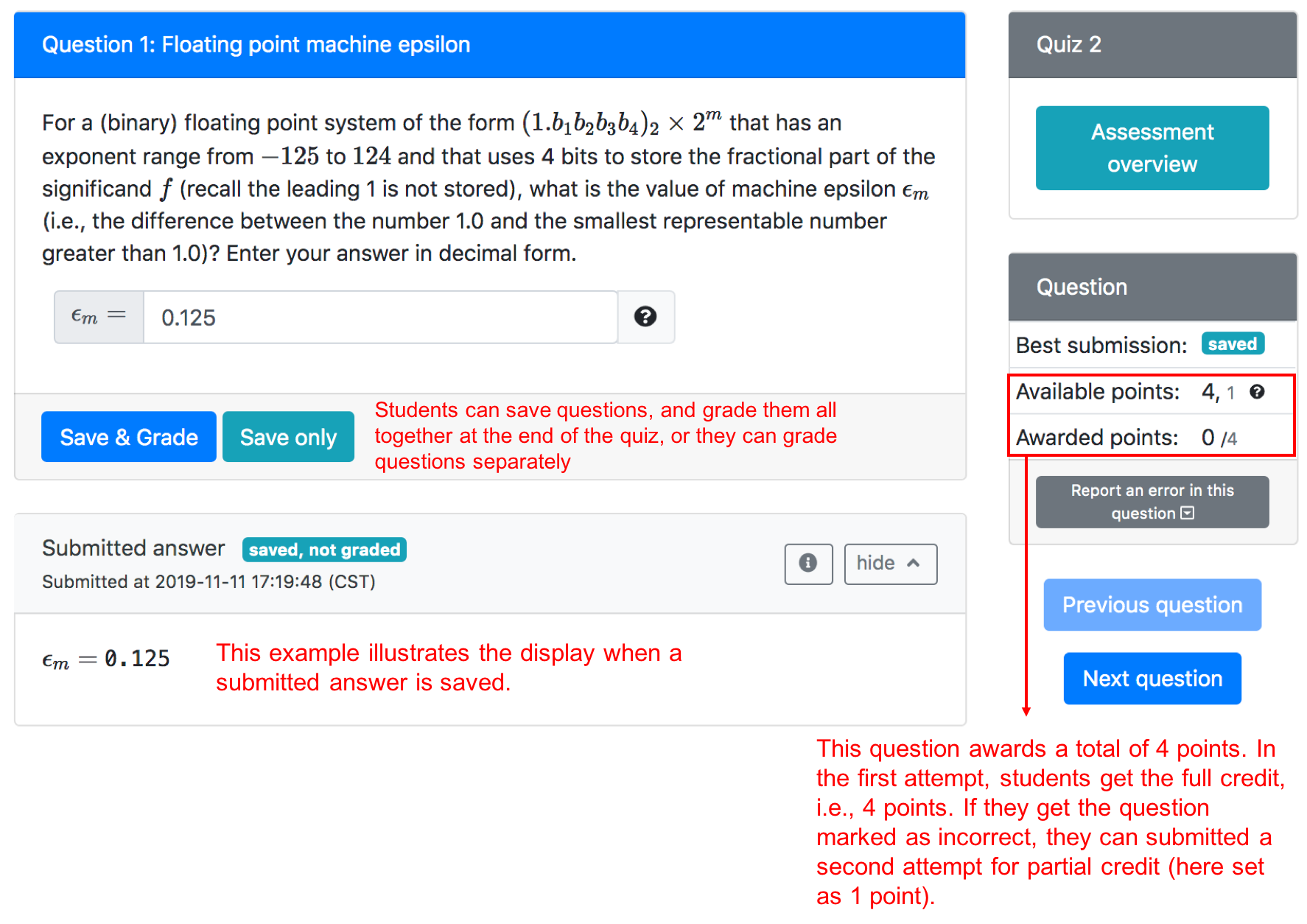

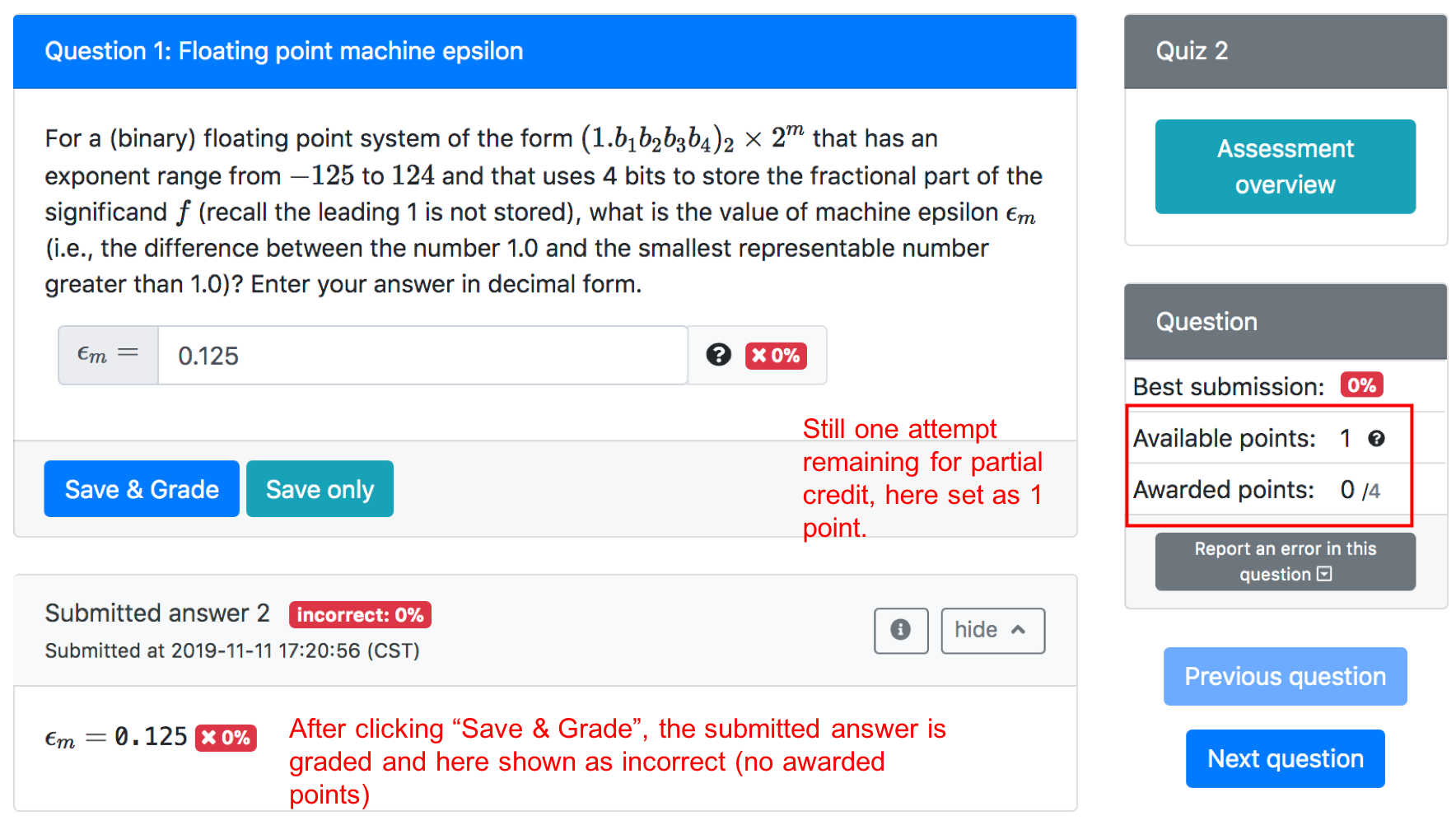

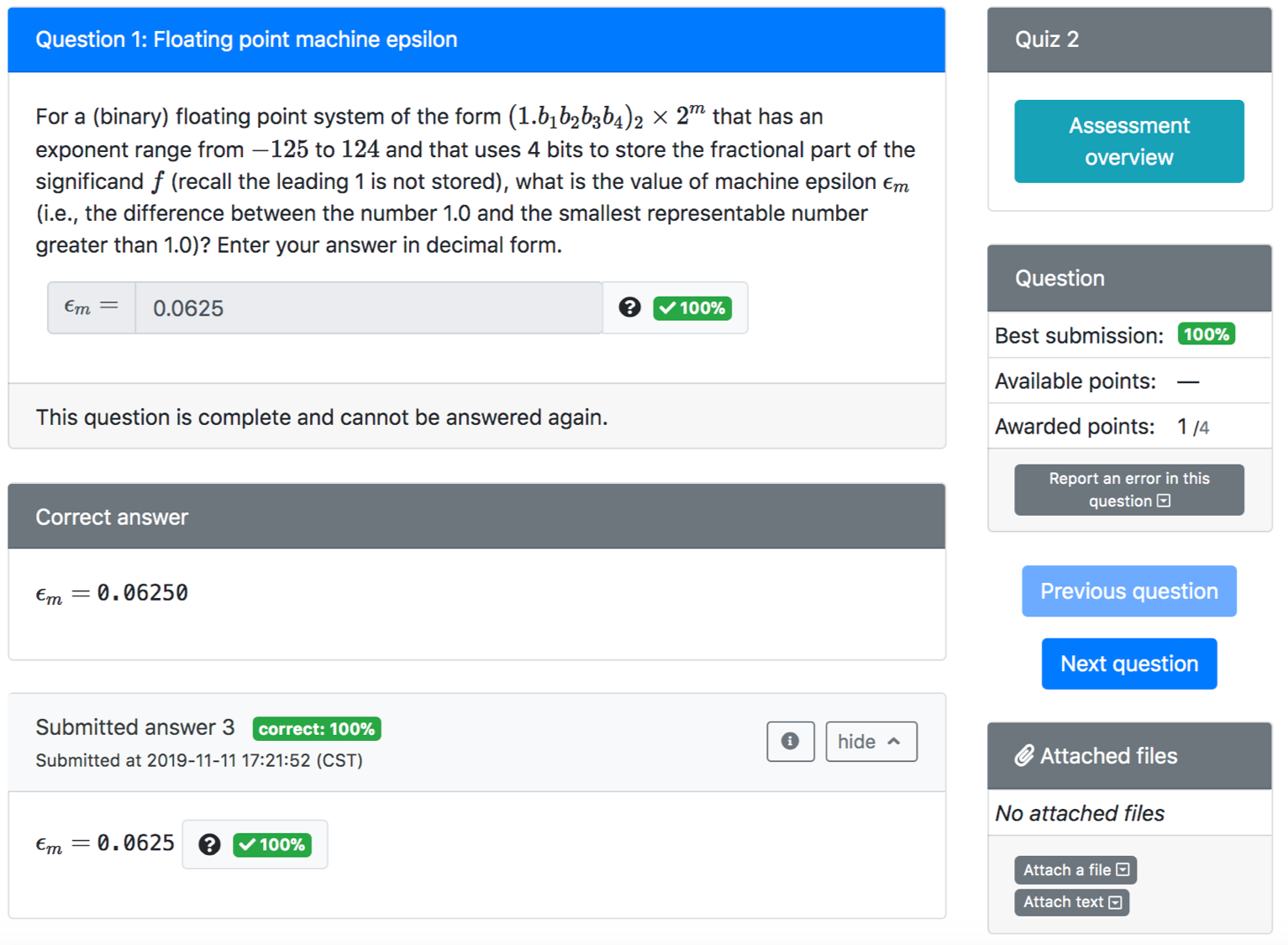

The same problem generator behaves differently when it is included in an exam. Figures 4-6 illustrate another instance of the same problem appearing on a quiz. In this question, students have two attempts to answer correctly. A student whose first submission is incorrect can retry the same problem instance (rather than a new one) for a reduced number of points.
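The exam behavior reduces to a per-attempt points schedule on a single, fixed instance. The sketch below is hypothetical: the function name and the schedule of 10 points on the first attempt and 5 on the second are assumptions, since the exact reduction is not stated above. Submissions beyond the allowed number of attempts are ignored.

```python
def exam_score(submissions, attempt_points=(10.0, 5.0)):
    """Hypothetical exam grading for one fixed problem instance.

    submissions    -- correctness of each submission, in order
    attempt_points -- points available on each allowed attempt (assumed values)
    Returns the points earned for the first correct submission, or 0.
    """
    # zip() stops at the shorter sequence, so extra submissions are ignored.
    for points, is_correct in zip(attempt_points, submissions):
        if is_correct:
            return points
    return 0.0


print(exam_score([True]))          # correct on the first attempt
print(exam_score([False, True]))   # correct on the second, reduced credit
```

Keeping the same instance across attempts means a student cannot simply read the answer off a failed attempt and apply it to a new version, while the reduced second-attempt value still rewards fixing a mistake.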